Wget is a GNU command-line utility for downloading files from the internet. It supports the HTTP, HTTPS, and FTP protocols.

Wget is designed to work efficiently and dependably over slow or unstable network connections. If a download fails because of a network problem, Wget keeps retrying until the entire file has been retrieved, and if the server supports it, Wget resumes the download from where it left off.

This article explores how Wget works with proxy servers, which opens up many options for effective data retrieval.

Prerequisites and Installation

This article is written for developers of all experience levels. To get the most out of it, you should have:

- Familiarity with Linux and Unix commands and their parameters.

- Wget installed.

Open a terminal and type this line to see if Wget is installed:

$ wget -V

This returns the version if Wget is installed. If not, use the instructions below to download Wget for Windows or Mac.
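If you want a script to make the same check before it calls Wget, a minimal sketch might look like the following. It relies only on the POSIX command -v builtin. The variable name wget_status is an illustrative assumption, not something Wget defines.

```shell
# Record whether wget is on the PATH (wget_status is a hypothetical name).
if command -v wget >/dev/null 2>&1; then
    wget_status="installed"
    # Print only the first line of the version banner.
    wget --version | head -n 1
else
    wget_status="missing"
    echo "wget is not installed; see the installation steps for your platform." >&2
fi
```

A script could then test wget_status and stop early instead of failing on its first download.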

Download wget on Mac

On a Mac, the recommended way to install Wget is with a package manager such as Homebrew.

Run the following command to install wget using Homebrew:

$ brew install wget

To confirm that the installation succeeded, run wget -V again. It should now print the installed version.

Download wget on Windows

- Download Wget for Windows and install it.

- Make sure that the wget.exe file is in the C:\Windows\System32 folder.

- Run wget at the command prompt (cmd.exe) to confirm that the installation succeeded.

Download a single file

Run this line to download a regular file:

$ wget https://example.com/scraping-bee.txt

You can also configure Wget to retrieve the file only when the version on the server is newer than your local copy. To see the timestamp that the server reports for the file, first download it with -S, which prints the server's response headers (including Last-Modified, when the server sends it):

$ wget -S https://example.com/scraping-bee.txt

Next, run the following command to check whether the file has changed and download it only if it has:

$ wget -N https://example.com/scraping-bee.txt

Run these lines if you want to download a page and save it under the HTML page's title:

wget -O tmp '...' &&

name=$(gawk '...' tmp) &&

mv tmp "$name"

If the title contains a / character, handle it as necessary, because / cannot appear in a file name.
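The gawk program in the command above is elided, so the following is not that program. It is a separate, minimal sketch of the same idea using tr and sed. The helper name title_to_filename is hypothetical, and the sketch assumes a single lowercase <title> tag and replaces every / with an underscore.

```shell
# Hypothetical helper: print the <title> of a saved HTML file as a safe file name.
# Assumes one lowercase <title>...</title> element; other markup is not handled.
title_to_filename() {
    # Join all lines so a title split across lines is still matched,
    # extract the text between the title tags, trim surrounding spaces,
    # and replace "/" because it cannot appear in a file name.
    tr '\n' ' ' < "$1" |
        sed -n 's/.*<title[^>]*>\([^<]*\)<\/title>.*/\1/p' |
        sed -e 's/^ *//' -e 's/ *$//' -e 's|/|_|g'
}

# Possible usage (the URL is a placeholder):
#   wget -O tmp 'https://example.com/' &&
#   name=$(title_to_filename tmp) &&
#   [ -n "$name" ] && mv tmp "$name.html"
```

The empty-name check in the usage comment keeps the temporary file in place when no title is found, rather than attempting to rename it to an empty string.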

Download a File to a Specific Directory

Simply substitute your desired output directory location for PATH when saving the file.

$ wget -P <PATH> https://example.com/sitemap.xml

Define Yourself as a User-Agent

$ wget --user-agent=Chrome https://example.com/file.html

Limit Speed

Not crawling too quickly is part of proper scraping technique. Thankfully, Wget's --wait and --limit-rate options can help with that.

--wait=1            # Wait 1 second between retrievals.

--limit-rate=10K    # Limit the download speed to 10 kilobytes per second.

Convert Links on a Page

Convert the links in the downloaded HTML so that they continue to work in your local copy. (For instance, a link to a page that Wget has also downloaded is rewritten as a relative link to the local file.)

$ wget --convert-links https://example.com/path

Extract Multiple URLs

Create a urls.txt file first, then add all the desired URLs to it.

https://example.com/1

https://example.com/2

https://example.com/3

Run the following command to download every URL in the file:

$ wget -i urls.txt

That covers the most commonly used Wget commands.
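The options from the sections above can also be combined in a single call. The following is a minimal sketch, not a recommended configuration: the function name polite_fetch and the default directory name downloads are assumptions made for illustration, and the wait and rate values simply reuse the ones shown earlier.

```shell
# Hypothetical wrapper: download every URL listed in a file without
# hammering the server. All flags are standard GNU Wget long options.
polite_fetch() {
    url_file=$1               # file with one URL per line, e.g. urls.txt
    out_dir=${2:-downloads}   # output directory (assumed default name)

    wget --input-file="$url_file" \
         --directory-prefix="$out_dir" \
         --wait=1 \
         --limit-rate=10k \
         --user-agent="Chrome" \
         --timestamping
}

# Possible usage:
#   polite_fetch urls.txt ./data
```

Because of --timestamping (the long form of -N), running the function a second time should only re-download files that have changed on the server.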

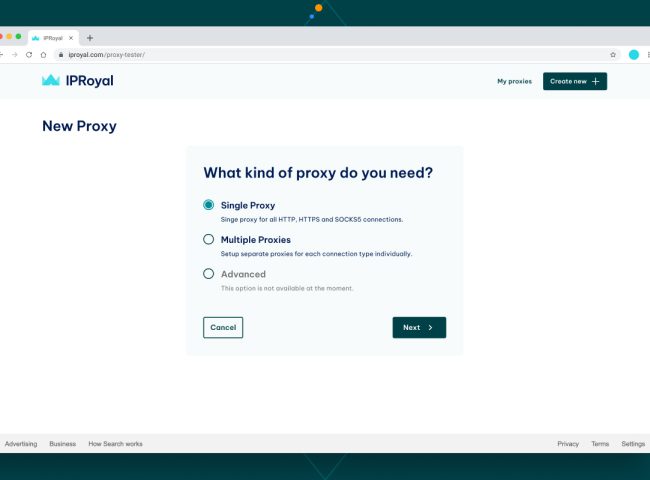

To use a proxy with Wget

First, find the Wget initialization file, which is located at either $HOME/.wgetrc (for a single user) or /usr/local/etc/wgetrc (global, for all users). The Wget documentation includes a sample .wgetrc file that you can use as a reference.

- Add the following lines to the initialization file:

https_proxy = http://[Proxy_Server]:[port]

http_proxy = http://[Proxy_Server]:[port]

ftp_proxy = http://[Proxy_Server]:[port]

- Set Proxy Variables. If you don't have a proxy yet, we recommend Proxy-cheap.

The following environment variables are recognized by Wget for specifying proxy location:

- The URLs of the proxies for HTTP and HTTPS connections should be contained in the variables http_proxy and https_proxy, respectively.

- For FTP connections, the variable ftp_proxy should contain the proxy’s URL. Both http_proxy and ftp_proxy are frequently set to the same URL.

- The variable no_proxy should contain a comma-separated list of domain extensions for which the proxy should not be used.

In addition to the environment variables, proxy settings can be controlled from within Wget itself. The --no-proxy option and the corresponding 'proxy = on/off' command can be used to suppress the use of the proxy, even if the appropriate environment variables are set.
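As a rough illustration of controlling the proxy per command rather than through the environment, consider the sketch below. The function names fetch_direct and fetch_via_proxy are hypothetical. The second function uses Wget's -e option, which executes a .wgetrc-style command for that one invocation, and the proxy address is whatever the caller passes in.

```shell
# Hypothetical helpers for choosing the proxy on a per-command basis.

fetch_direct() {
    # $1 = URL to download.
    # Ignore any *_proxy environment variables for this one download.
    wget --no-proxy "$1"
}

fetch_via_proxy() {
    # $1 = proxy URL, $2 = URL to download.
    # -e runs a .wgetrc-style command, so nothing is exported to the shell.
    wget -e use_proxy=on \
         -e http_proxy="$1" \
         -e https_proxy="$1" \
         "$2"
}

# Possible usage (addresses are placeholders):
#   fetch_direct https://example.com/file.html
#   fetch_via_proxy http://[Proxy_Server]:[port] https://example.com/file.html
```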

Run the following command to set the variables in the shell:

$ export http_proxy=http://[Proxy_Server]:[port]

$ export https_proxy=$http_proxy

$ export ftp_proxy=$http_proxy

Finally, to keep these settings across sessions, add the following lines to your /etc/profile or your ~/.bash_profile:

export http_proxy=http://[Proxy_Server]:[port]

export https_proxy=http://[Proxy_Server]:[port]

export ftp_proxy=http://[Proxy_Server]:[port]

Some proxy servers require authorization before allowing access, typically in the form of a username and password that Wget must send. As with HTTP authorization, several authentication schemes exist, but only the Basic scheme is used for proxy authorization in practice.

You can supply your username and password either through the proxy URL or through command-line parameters. Assuming that a company's proxy server is located at proxy.company.com on port 8001, a proxy URL containing authorization data would look something like this:

http://hniksic:[password]@proxy.company.com:8001/

Alternatively, you can use the --proxy-user and --proxy-password options, or the equivalent .wgetrc settings proxy_user and proxy_password, to set the proxy username and password.
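A small sketch of the command-line variant follows. The function name fetch_with_proxy_auth is hypothetical, and the sketch assumes that the proxy location itself is already configured through the environment variables or .wgetrc settings described earlier.

```shell
# Hypothetical helper: download a URL through a proxy that needs credentials.
# The proxy address is expected to come from http_proxy / https_proxy
# (environment or .wgetrc); only the credentials are supplied here.
fetch_with_proxy_auth() {
    # $1 = proxy user name, $2 = proxy password, $3 = URL to download
    wget --proxy-user="$1" --proxy-password="$2" "$3"
}

# Possible usage (values are placeholders):
#   fetch_with_proxy_auth hniksic "[password]" https://example.com/file.html
```

Keep in mind that a password passed on the command line may be visible to other users of the same machine through process listings, so the proxy_user and proxy_password settings in .wgetrc can be the safer place for it.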

That's it! You can now use your proxy to download data with Wget.

Conclusion

Now that you know how to use Wget with a proxy, you can retrieve nearly anything you need from websites. Get comfortable with Wget, a free and user-friendly utility that doesn't seem to be going away anytime soon. The information in this post should be enough to get you started.

Happy scraping!

I’m Amine, a 34-year-old mobile enthusiast with a passion for simplifying the world of proxy providers through unbiased reviews and user-friendly guides. My tech journey, spanning from dial-up internet to today’s lightning-fast mobile networks, fuels my dedication to demystifying the proxy world. Whether you prioritize privacy, seek marketing advantages, or are simply curious, my blog is your trusted source.