Websites use a range of methods, such as IP address detection, HTTP request header inspection, CAPTCHAs, and JavaScript checks, to make web scraping difficult. However, by using a number of similar techniques to get around these limitations, web scraping developers can build scrapers that are very hard to detect. Here are a few brief tips on how to crawl a website without being blocked.

1. Using Headless Browsers

The trickiest websites look for subtle signals such as web fonts, extensions, browser cookies, and JavaScript execution to decide whether a request is coming from a genuine user. To crawl these websites, you might need to run your own headless browser (or use a scraping API!).

With tools like Puppeteer and Selenium, you can write a program that drives a real web browser the way a real user would, which makes detection much harder. Making Selenium or Puppeteer undetectable takes some effort, but it lets you crawl websites that would otherwise block simpler crawlers.

Note that these programmable browsers, while effective for web scraping, are quite CPU- and memory-heavy and will occasionally crash. Most websites do not require them, because a quick GET request is enough. Reach for these tools only if you are unable to crawl a page without a real browser!
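As a rough sketch of the "plain request first, browser only when needed" idea, the snippet below tries a cheap GET and falls back to headless Chrome only when the response looks blocked. The function names and the `looks_blocked` heuristic are hypothetical, and the browser path assumes Selenium 4 and a local Chrome install.

```python
import urllib.error
import urllib.request


def fetch_plain(url, timeout=10.0):
    """Fetch a page with a plain GET request; returns (status, html)."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status, resp.read().decode("utf-8", errors="replace")
    except urllib.error.HTTPError as err:
        # Blocked requests usually surface as 4xx/5xx responses.
        return err.code, ""


def fetch_with_browser(url):
    """Render a page in headless Chrome (assumes Selenium 4 and Chrome are installed)."""
    from selenium import webdriver  # imported lazily: only needed on this path

    options = webdriver.ChromeOptions()
    options.add_argument("--headless=new")  # older Chrome builds use "--headless"
    driver = webdriver.Chrome(options=options)
    try:
        driver.get(url)
        return driver.page_source
    finally:
        driver.quit()  # always release the browser; it is memory-hungry


def looks_blocked(status, html):
    """Hypothetical heuristic: treat 403/429 or a CAPTCHA page as a block."""
    return status in (403, 429) or "captcha" in html.lower()


def fetch(url, plain=fetch_plain, browser=fetch_with_browser):
    """Try the cheap request first and launch a browser only if it looks blocked."""
    status, html = plain(url)
    if looks_blocked(status, html):
        return browser(url)
    return html
```

Passing the two fetchers in as parameters keeps the fallback logic easy to test without a network connection or a browser.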

2. IP Rotation to avoid scraping blocks

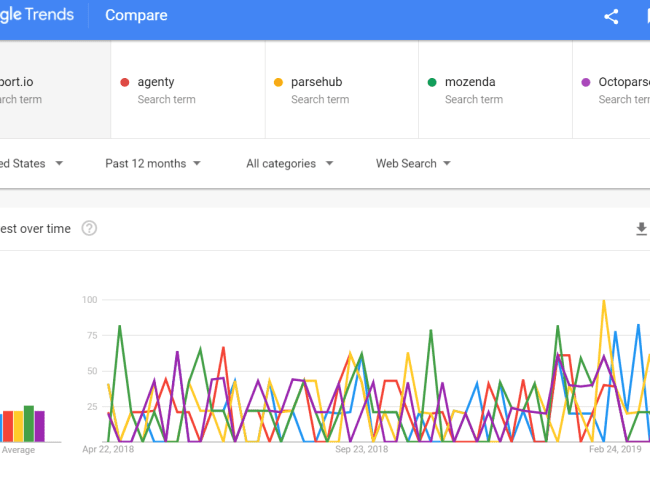

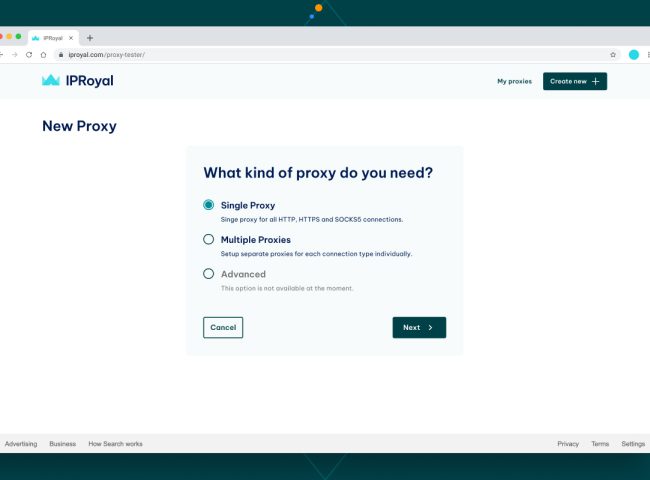

Since IP address analysis is the primary method websites use to identify web scrapers, most scrapers that avoid blocking spread their requests over many IP addresses so that no single address gets banned. To stop all of your requests going out through the same IP address, you can use an IP rotation service, like Scraper API, or another proxy service to route them through a range of different addresses. This lets you scrape most websites without issues.

For sites that use more advanced proxy blacklists, you might need to try residential or mobile proxies. If you’re not sure what this means, check out our post on the different types of proxies here. In the end, the number of IP addresses available on the Internet is finite, and the majority of Internet users only get one (the IP address assigned to them by their home Internet provider).

Having said that, with a million IP addresses you could browse as much as a million ordinary Internet users without raising any red flags. Rotating across multiple IP addresses is the first thing to try if you get blocked, as IP-based blocking is the most widely used strategy for websites to restrict web crawlers.
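Here is a minimal sketch of rotating requests across a proxy pool with the standard library. The `ProxyRotator` class, the helper names, and the proxy addresses (taken from the 203.0.113.0/24 documentation range) are all made up for illustration; a commercial rotation service would normally handle this for you.

```python
import urllib.request
from collections import deque


class ProxyRotator:
    """Hands out proxies round-robin and forgets the ones that stop working."""

    def __init__(self, proxies):
        self._pool = deque(proxies)

    def next_proxy(self):
        if not self._pool:
            raise RuntimeError("proxy pool is empty")
        proxy = self._pool[0]
        self._pool.rotate(-1)  # move the proxy just handed out to the back
        return proxy

    def discard(self, proxy):
        if proxy in self._pool:
            self._pool.remove(proxy)


def fetch_via(url, proxy, timeout=10.0):
    """Send one GET request through the given proxy."""
    handler = urllib.request.ProxyHandler({"http": proxy, "https": proxy})
    opener = urllib.request.build_opener(handler)
    with opener.open(url, timeout=timeout) as resp:
        return resp.read()


def fetch_with_rotation(url, rotator, attempts=3, fetch=fetch_via):
    """Retry through different proxies, dropping any proxy that fails."""
    last_error = None
    for _ in range(attempts):
        proxy = rotator.next_proxy()
        try:
            return fetch(url, proxy)
        except OSError as err:  # URLError, HTTPError and timeouts are all OSError
            rotator.discard(proxy)
            last_error = err
    raise RuntimeError(f"all {attempts} attempts failed") from last_error


# Hypothetical pool; replace with addresses from your proxy provider.
rotator = ProxyRotator([
    "http://203.0.113.1:8080",
    "http://203.0.113.2:8080",
    "http://203.0.113.3:8080",
])
```

Dropping a proxy on the first error is deliberately simplistic. In practice you would probably count failures per proxy and only retire one after several.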

3. Add Additional Request Headers

Real web browsers set a whole host of headers, any of which careful websites can check to block your web scraper. To make your scraper appear to be a real browser, navigate to https://httpbin.org/anything and simply copy the headers you see there (they are the headers your current web browser is sending). Setting headers like “Accept”, “Accept-Encoding”, “Accept-Language”, and “Upgrade-Insecure-Requests” makes your requests look like they are coming from a real browser, so your web crawling is not interrupted.

For example, the headers sent by Google Chrome 76 are:

- “Accept”: “text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3”,

- “Accept-Encoding”: “gzip”,

- “Accept-Language”: “en-US,en;q=0.9,es;q=0.8”,

- “Upgrade-Insecure-Requests”: “1”,

- “User-Agent”: “Mozilla/5.0 (Macintosh; Intel Mac OS X 10_12_6) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/76.0.3809.132 Safari/537.36”

By rotating through a series of IP addresses and setting proper HTTP request headers (especially User Agents), you should be able to avoid detection by the vast majority of websites.
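The snippet below is one possible way to attach the example headers above to every request using only the standard library. The helper names (`build_request`, `read_body`, `fetch`) are illustrative. Note that once you advertise `Accept-Encoding: gzip`, the server may compress the body, so the sketch decompresses it when needed.

```python
import gzip
import urllib.request

# Values copied from the example header list above; refresh them from a
# current browser before relying on them.
BROWSER_HEADERS = {
    "Accept": (
        "text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,"
        "image/apng,*/*;q=0.8,application/signed-exchange;v=b3"
    ),
    "Accept-Encoding": "gzip",
    "Accept-Language": "en-US,en;q=0.9,es;q=0.8",
    "Upgrade-Insecure-Requests": "1",
    "User-Agent": (
        "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_12_6) AppleWebKit/537.36 "
        "(KHTML, like Gecko) Chrome/76.0.3809.132 Safari/537.36"
    ),
}


def build_request(url, extra_headers=None):
    """Create a request carrying browser-like headers plus optional extras."""
    headers = dict(BROWSER_HEADERS)
    headers.update(extra_headers or {})
    return urllib.request.Request(url, headers=headers)


def read_body(resp):
    """Return the response body, un-gzipping it if the server compressed it."""
    raw = resp.read()
    if resp.headers.get("Content-Encoding", "").lower() == "gzip":
        raw = gzip.decompress(raw)
    return raw


def fetch(url, timeout=10.0):
    """GET a URL with the browser-like headers and return the decoded bytes."""
    with urllib.request.urlopen(build_request(url), timeout=timeout) as resp:
        return read_body(resp)
```

Calling `fetch("https://httpbin.org/anything")` is a quick way to see which headers the server actually received.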

4. Set a Real User Agent

The User Agent is a special HTTP header that tells the website exactly which browser you are using. Any website can inspect user agents and block requests whose user agent does not belong to a major browser. Many web scrapers never set the User Agent at all and are easily detected by checking for a missing User Agent.

Don’t be one of these developers! Remember to give your web crawler a popular user agent (you can find a list of popular user agents here). For advanced users, you can even set your User Agent to the Googlebot User Agent, since most websites want to be listed on Google and therefore let Googlebot through.

Every new release of Google Chrome, Safari, Firefox, etc. ships with a different user agent, so if you go years without updating the user agent on your crawlers, they will look more and more suspicious. It’s crucial to keep the user agents you use up to date.
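A small sketch of both ideas, picking a user agent from a pool and flagging ones that have gone stale, might look like this. The pool entries other than the Chrome 76 string are illustrative examples of real browser formats and will themselves age, and the helper names and the version threshold are assumptions.

```python
import random
import re
import urllib.request

# Illustrative pool; replace these with strings copied from current browsers.
USER_AGENTS = [
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_12_6) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/76.0.3809.132 Safari/537.36",
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:115.0) Gecko/20100101 Firefox/115.0",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 "
    "(KHTML, like Gecko) Version/16.5 Safari/605.1.15",
]


def pick_user_agent(rng=random):
    """Choose one user agent at random from the pool."""
    return rng.choice(USER_AGENTS)


def build_request(url, rng=random):
    """Create a request that carries a randomly chosen User-Agent header."""
    return urllib.request.Request(url, headers={"User-Agent": pick_user_agent(rng)})


def chrome_major_version(user_agent):
    """Return the Chrome major version in a user agent, or None if absent."""
    match = re.search(r"Chrome/(\d+)\.", user_agent)
    return int(match.group(1)) if match else None


def is_stale(user_agent, minimum_chrome_major):
    """Flag Chrome user agents older than a threshold you choose."""
    version = chrome_major_version(user_agent)
    return version is not None and version < minimum_chrome_major
```

Running `is_stale` over the pool in a scheduled job is one cheap way to get reminded that the strings need refreshing.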

5. Avoid Honeypot Traps

Many websites plant invisible links that only a bot would follow in an attempt to catch web crawlers. Before following a link, check whether it has the CSS property “display: none” or “visibility: hidden” set. If it does, do not follow it; otherwise the website can recognize you as a programmatic crawler, fingerprint the characteristics of your requests, and block you without delay.

Honeypots are one of the simplest ways for seasoned webmasters to detect crawlers, so be sure to perform this check on each page you scrape. Experienced webmasters may also just set the link color to white (or whatever the page’s background color is), so you should also check whether the link has something like “color: #fff;” or “color: #ffffff;” set, as that makes the link effectively invisible too.
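The checks described above can be sketched with the standard-library HTML parser. This is a simplified illustration: it only inspects inline `style` attributes and the HTML `hidden` attribute, so links hidden through external stylesheets or CSS classes will slip past it, and the white-text rule assumes the page background is white. The names `HIDDEN_STYLE`, `LinkSorter`, and `split_links` are made up for this example.

```python
import re
from html.parser import HTMLParser

# Inline-style patterns that make a link invisible. The lookbehind keeps
# "background-color" from being mistaken for "color".
HIDDEN_STYLE = re.compile(
    r"display\s*:\s*none"
    r"|visibility\s*:\s*hidden"
    r"|(?<![-\w])color\s*:\s*#fff(?:fff)?\b",
    re.IGNORECASE,
)


class LinkSorter(HTMLParser):
    """Collects link targets, separating visible links from likely honeypots."""

    def __init__(self):
        super().__init__()
        self.visible = []
        self.suspicious = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attr = dict(attrs)
        href = attr.get("href")
        if not href:
            return
        style = attr.get("style") or ""
        hidden = bool(HIDDEN_STYLE.search(style)) or "hidden" in attr
        (self.suspicious if hidden else self.visible).append(href)


def split_links(html):
    """Return (visible_links, suspicious_links) found in the HTML."""
    sorter = LinkSorter()
    sorter.feed(html)
    return sorter.visible, sorter.suspicious
```

A crawler would then queue only the first list, for example `visible, _ = split_links(page_html)`.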

6. Detect Website Changes

Many websites change their layouts for a variety of reasons, which can cause scrapers to break. In addition, some websites have different layouts in unexpected places (for instance, page 1 of the search results may have a different layout from page 4).

This holds true even for surprisingly large companies that are less tech-savvy, like the big department stores that are only now moving their operations online. You need to detect these changes properly when building your scraper and set up ongoing monitoring so that you know your crawler is still working (typically, this just means counting the number of successful requests per crawl).

Another straightforward way to set up monitoring is to write a unit test for a specific URL on the site (or one URL of each type; for instance, on a review website you may want one unit test for the search results page, one for a review page, one for a main product page, etc.).

In this manner, you can check for breaking site changes with only a few requests every 24 hours or so, instead of needing to do a full crawl to detect issues.
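One way to sketch such a check is to list the class names and ids your scraper depends on for each page type and report any that disappear. The page types and marker names below are hypothetical; substitute the ones your own selectors rely on. The `success_rate` helper covers the simpler per-crawl monitoring mentioned earlier.

```python
from html.parser import HTMLParser

# Hypothetical markers per page type; ids are written with a leading "#".
EXPECTED_MARKERS = {
    "search_results": ["result-item", "#pagination"],
    "product": ["product-title", "price"],
}


class _MarkerCollector(HTMLParser):
    """Records every class name and id that appears in a page."""

    def __init__(self):
        super().__init__()
        self.seen = set()

    def handle_starttag(self, tag, attrs):
        for name, value in attrs:
            if name == "class" and value:
                self.seen.update(value.split())
            elif name == "id" and value:
                self.seen.add("#" + value)


def missing_markers(html, required):
    """Return the required markers that are absent from the page, sorted."""
    collector = _MarkerCollector()
    collector.feed(html)
    return sorted(set(required) - collector.seen)


def success_rate(status_codes):
    """Fraction of requests in a crawl that returned a 2xx status."""
    codes = list(status_codes)
    if not codes:
        return 0.0
    return sum(200 <= code < 300 for code in codes) / len(codes)
```

A daily job could fetch one URL per page type and alert whenever `missing_markers` returns a non-empty list or `success_rate` drops below a threshold you pick.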

Conclusion

Hopefully, this post has given you some useful advice for scraping popular websites without getting blocked or IP banned. While setting up IP rotation and appropriate HTTP request headers should be sufficient in most cases, you may occasionally need more advanced techniques, such as headless browsers or Google cache scraping, to obtain the data you need.

As always, when scraping, be considerate of webmasters and other site visitors. If you notice that the site is slowing down, you should lower your request rate. This is especially important when scraping smaller websites that do not have the web hosting resources available to larger companies.
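If you want to automate that courtesy, a simple adaptive delay is one option. The sketch below doubles the pause between requests when a response is slow or the server answers 429 or 503, and eases back toward the base delay when responses are healthy again. The class name and the default thresholds are arbitrary assumptions, not recommended values.

```python
import time


class AdaptiveDelay:
    """Pause between requests, backing off when the site seems to struggle."""

    def __init__(self, base=1.0, maximum=60.0, slow_threshold=2.0):
        self.base = base                      # shortest pause, in seconds
        self.maximum = maximum                # longest pause, in seconds
        self.slow_threshold = slow_threshold  # response time considered "slow"
        self.delay = base

    def record(self, response_seconds, status=200):
        """Update the delay after a request and return the new value."""
        if status in (429, 503) or response_seconds > self.slow_threshold:
            self.delay = min(self.delay * 2, self.maximum)  # back off
        else:
            self.delay = max(self.delay / 2, self.base)     # recover gradually
        return self.delay

    def wait(self):
        """Sleep for the current delay before sending the next request."""
        time.sleep(self.delay)
```

In a crawl loop you would time each request with `time.monotonic()`, pass the elapsed seconds and status code to `record`, and call `wait` before the next request.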

Happy scraping!

I’m Amine, a 34-year-old mobile enthusiast with a passion for simplifying the world of proxy providers through unbiased reviews and user-friendly guides. My tech journey, spanning from dial-up internet to today’s lightning-fast mobile networks, fuels my dedication to demystifying the proxy world. Whether you prioritize privacy, seek marketing advantages, or are simply curious, my blog is your trusted source.